A guide to the field of Deep Learning

The field of Machine Learning is huge. You can easily be overwhelmed by the amount of information out there. To not get lost, the following list helps you estimate where you are. It provides an outline of the vast Deep Learning space.

The field of Machine Learning is huge. You can easily be overwhelmed by the amount of information out there. To not get lost, the following list helps you estimate where you are. It provides an outline of the vast Deep Learning space and does not emphasize certain resources. Where appropriate, I have included clues to help you orientate.

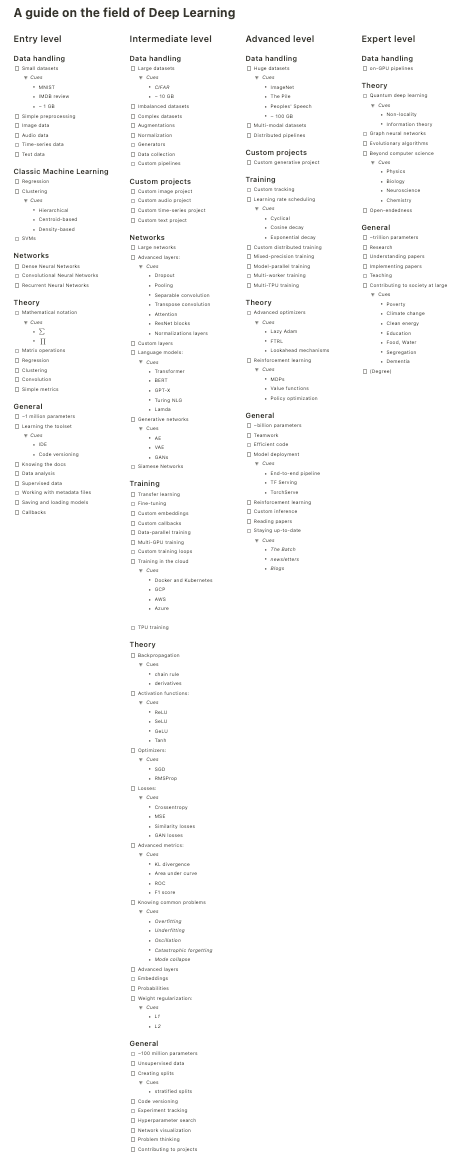

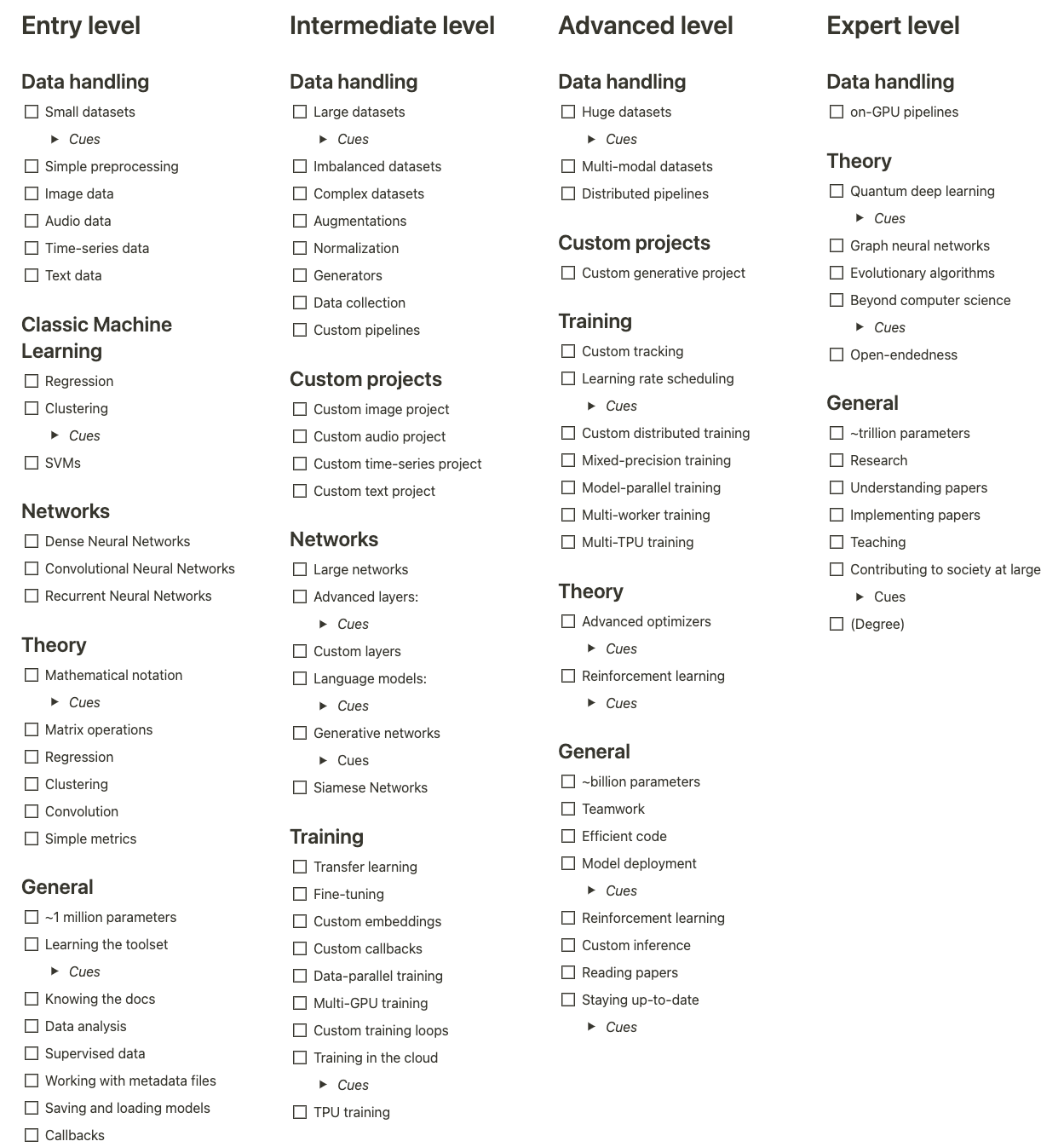

Since the list has gotten rather long, I have included an excerpt above; the full list is at the bottom of this post.

Entry level

The entry-level is split into 5 categories:

- Data handling introduces you to small datasets

- Classic Machine Learning covers key concepts of classic Machine Learning techniques

- Networks covers the classic DNNs, CNNs, and RNNs

- Theory lists the concepts behind the above categories

- General lists the main things you encounter at this stage

Data handling

At the entry level, the datasets used are small. Often, they easily fit into the main memory. If they don’t already come pre-processed then it’s only a few lines of code to apply such operations. Mainly you’ll do so for the major domains Audio, Image, Time-series, and Text.

Classic Machine Learning

Before diving into the large field of Deep Learning it’s a good choice to study the basic techniques. These include regression, clustering, and SVMs. Of the listed algorithms, only the SVM might be a bit more tricky. Don’t let yourself be overwhelmed by this: Give it a try, and then move on.

Networks

No Deep Learning without its most important ingredient: Neural networks in all variants; GANs, AEs, VAEs, Transformers, DNNs, CNNs, RNNs, and many more. But there’s no need to cover everything yet. At this stage, it’s sufficient to have a look at the last three keywords.

Theory

No Deep Learning without neural networks, and no neural networks without (mathematical) theory. You can begin the learning by getting to know mathematical notation. A bit scary at first, you’ll soon begin to embrace the concise brevity. Once you grasp it, look at matrix operations, a central concept behind neural networks.

And with this knowledge, you can then proceed to the convolution operations, another central concept.

Put simply, you move a matrix over another matrix and calculate the inner product between the overlapping areas. There are many variants — keep learning and you’ll naturally use them, too!

General

You can rest assured that you can use all types of networks on your home machine at the entry level. The typical number of parameters will be around one million, and sufficient for your tasks. Mainly, you’ll concentrate not on getting a network to run but also learning things around them. This included learning your tools, consulting the documentation, analysing data, and so on.

Intermediate level

There’s no real dividing line between the intermediate and the former entry level. You’ll mainly notice this by handling larger datasets, working on custom projects with advanced networks, and coming up with better ways to kick of training. Everything that you’ll encounter at this stage builds on your previous work:

- Data handling focuses on larger datasets

- Custom projects are what you’ll work on

- Networks become more advanced

- Training lets you dive deeper into training a network

- Theory focuses on expanding your background knowledge

- General lists several items that you work with at this level

Data handling

Once you work with datasets of a few GBs you’ll solve new problems. Getting them from the drive fast enough is critical, this might involve normalization techniques and custom pipelines. Since the datasets tend to become more complex you might have to tinker about augmentations, custom generators, or collecting additional data.

Custom projects

As in my previous posts, custom projects are the heart of the intermediate level. Custom means to work on your own tasks, no longer following tutorials about MNIST. This involves all major domains and creates tremendous synergies for the upcoming categories.

Networks

Working on custom projects, you’ll naturally get your hands on larger networks. These networks often feature advanced layers to take over the tedious tasks. Normalizing data? Just use a BatchNormalization layer for this. But what do you do if there’s no layer yet for your task? The answer is simple: You write a custom layer.

Besides advanced layers, advanced architectures are also a thing at this stage. The normal dense networks are slowly replaced by sophisticated language models, CNNs are used to generate images in generative networks, and Siamese networks are handy for your imbalanced datasets.

Training

Working with a variety of networks can introduce you to new techniques to get started more quickly. One of these is transfer learning, where you use a network trained on a different task for your problem. Fine-tuning goes in a similar direction and means freezing the majority of weights. Only the remaining “non-frozen” weights are updated

The previous two techniques are also used in the concept of embeddings. Embeddings are a truly smart way to represent additional knowledge. Think about a tree. A tree is more than just leaves and wood. A tree cleanses the air, gives shade, and offers a home for all sorts of animals. Put different, “tree” is not just a word, but a concept, involving all kind of additional information. And you can incorporate such information by using embeddings.

There’s more to learn from this category, embeddings are just a start. If you have a complicated dataset you can write your own callbacks to control the training progress. Or you switch to multi-GPU training, where the model is replicated on different devices, and the updates are synchronized.

Going one step further, you can combine this with custom training loops, presumably in the cloud (since only a few people have more than one GPU at their hand). And if you need serious power then use TPUs.

Theory

All the concepts you have seen so far also include an underlying theory. Learning the theoretical aspect must not involve complicated mathematics, but gaining an understanding of how things work.

In the case of neural networks, this driving factor is the backpropagation algorithm. Analytically determining the updates usually is computationally infeasible. The trick is to propagate the updates back by applying the chain rule of derivation countless times. You can even do this by hand!

The derivatives are influenced by activation functions and are finally applied with the help of optimizers. Given some loss functions, these optimizers make sure that you’ll do better with each step. Observing this progress involves some objective functions to assess the goodness of the current weights. This is where metrics come into play, and since you are at the intermediate level already you’ll encounter some advanced ones.

All that you have learned so far has also introduced you to common problems in the optimization of neural networks. Some techniques exist to alleviate them, have a look at the theory behind advanced (normalization) layers. Embeddings are not layers in that sense, but still an important part of natural language processing. For generative networks, the counterpart would be probability calculations.

General

Going from lightweight to advanced networks is accompanied by more parameters. You will now mainly work with models of around 100, 200 million parameters. Training such networks is doable, yes, but might require you to switch to faster or more hardware.

A driving factor that largely determines the training time is the dataset you use. Smaller datasets go through faster, larger datasets take longer. You might also deal with unsupervised data now, or have to create custom dataset splits.

Once you write custom code for the previous tasks it becomes beneficial to use code versioning systems. The last thing you want to encounter is a working code that breaks with a few changes and can’t be rolled back. A similar problem is prevented when you do experiment tracking. As soon as you reach good metrics, you want to know why, and tools like Weights&Biases help you here.

Now that you work on a variety of topics, all with their own challenges, you hone your ability to think through ML problems. This is a useful byproduct: You use what you have learned in a different context before for a new problem now. And once you solved it, why not contribute to some projects on GitHub?

Advanced level

Compared to the previous intermediate level, you now shift to even larger datasets, add generative networks in the mix, and examine various techniques to speed up your training. The advanced level is split into five categories:

- Data Handling requires you to process large and complex datasets

- Custom projects now includes generative networks

- Training explores shortening training times

- Theory includes advanced optimizers and Reinforcement Learning

- General collects a broad range of things you’ll learn

Data handling

Going from the intermediate to the advanced level includes handling larger datasets. Whereas the size previously has been around tens of gigabytes, you now encounter hundreds of gigabytes, a factor of 10 larger. Rest assured, your processing pipelines will still work — but you might want to distribute them, to speed things up.

Another topic at this stage is multi-modal datasets. This means that the dataset contains samples from multiple domains: images combined with a textual description, for example.

Custom projects

Additionally to your previous projects, you now explore the fascinating world of generative networks. For this, you can start with AutoEncoders, and then transition to GANs and their derivatives.

Training

The training category now includes advanced techniques to improve results and minimize training times. To verify the success of your training, you rely on accurate tracking methods. For simple metrics, a progress bar might be enough. But with more to track and advanced metrics coming in, it easily gets convoluted and complicated to understand. The solution is to write a custom tracking routine. You don’t have to start from scratch though, tools like TensorBoard and Weights&Biases make logging easy.

Once you hit a plateau (put simply, the scores are no longer increasing), you can try out scheduling the learning rate. Rather than having the learning rate fixed, you adapt it during training. This can involve dividing the learning rate by a constant factor, or cycling it between two boundaries. Try it out and see what works for your case.

When a single machine won’t do it anymore you can distribute the workload. Combine it with mixed-precision training to get further speed.

If your model or its activations grow too large for a single device, you can resort to model-parallel training. In data-parallel training, your model is replicated, the data is sharded to the replicas, and the weight updates are synchronized. In model-parallel training, you split the model and place its layers on different devices.

This can be combined with a multi-worker setup, where the different devices are not merely different local GPUs, but from different workers. The next logical step would then be taking advantage of multiple TPU workers. That introduces severe speed — make sure that your code is efficient, though.

Theory

The theory at this stage begins with advanced optimizers. Everybody knows and uses the default ones, but there are many contributions beyond that. Just have a look at the available optimizers from TensorFlow Addons and be inspired. You can combine optimizers with weight decay, average the updates, or update only some variables at all.

There’s also the field of Reinforcement Learning waiting for you. While it’s not a difficult topic, it’s just too much to learn early on. But once you are equipped with mathematical understanding (entry level) and the knowledge from the intermediate level you are ready to give it a try.

General

In terms of the number of parameters, you are now handling up to a billion parameters. It’s needless to say that this is not a one-person project anymore. Setting up such large-scale networks requires both teamwork and efficient code. And once you have successfully trained those billion-parameter model (or any model, that is), you can deploy it.

But there’s still more. Some rumours denote that there are a hundred ML papers published — each day. Staying up-to-date is impossible. But you can at least make this easier by reading papers and subscribing to selected newsletters. The blogs of large (tech) companies are also a good source of information. As far as my resources are concerned, I enjoy Andrew Ng’s The Batch newsletter, TensorFlow’s blog, Sebastian Ruder’s news on NLP, and occasionally visit DeepMind’s research page.

Expert level

To be clear, the further you get on your journey, the vaguer the boundaries get. All the following interesting things are building on what you have learned before. There is nothing completely new — except (partly) leaving the CS field or visiting uncharted terrain.

The expert level contains three broad categories:

- Data handling adds on-GPU pipelines

- Theory introduces quantum DL and going beyond CS

- General extends to trillion parameter models

Data handling

This is a short category, it features on-GPU pipelines as the sole item. The majority of pipelines can be easily set up to run on CPUs. Getting them to work on GPUs is another story. I remember reading a paper on GPU programming, I guess it was from around 2010, where the authors described how they used Nvidia’s CUDA to code. Much progress has since been made, with the advance of JAX another opportunity has emerged.

Do you need an on-GPU pipeline? If data pre-processing becomes a bottleneck, then yes.

Theory

At this level, you surely know what your interests are. To give you some ideas, you can check out Quantum physics, graph neural networks, and evolutionary algorithms. It’s going beyond computer science now.

And if you are still curious, read about open-endedness.

General

Hornik’s Universal Approximation theorem states that a function can be approximated with a single layer network with a reasonably small deviation from the desired reference function. The challenge is finding this approximation. In practice, deeper networks tend to work better. Therefore, you can try those trillion parameter models.

Training such large architectures can also be part of your research. Research is not confined to the “expert” level, it’s more the foundational knowledge that matters. The same holds for understanding papers (well, most of them), implementing papers and teaching.

The biggest challenge of all levels is contributing to society at large. Not merely speeding up convolutions from 2 seconds to 1 second, but going 10 steps further and solving the world’s pressing problems. This is not restricted to computer scientists but requires the combined effort of many (scientific) domains. It’s teamwork. The cues I have listed are as usual non-exhaustive, they are written as guideposts.

Where to go next?

This is a detailed yet non-detailed overview of the field of deep learning. Detailed, because I attempted to create an overview of the several stages along with their typical items. Non-detailed, since there’s always something further to be included.

With this said, there are several resources:

- For the entry level you can check out the TensorFlow Developer Professional Certificate

- Berkley’s Full Stack Deep Learning course

- DeepMind’s Advanced Deep Learning & Reinforcement Learning lecture

- TensorFlow: Data and Deployment Specialization and TensorFlow: Advanced Techniques Specialization

If you have reasonably further things to include please let me know.

Further notes

- I definitely have forgotten something mission-critical

- This is heavily biased by my own experience

- This work used my post on tracking ML progress as a base; I expanded it and added further notes

- This is the full list in its entirety: